Tab Clearing (October 7 2024)

New Reka Flash model - I’m excited to see them ticking along, doing what looks like good work making evals and then improving on them (e.g. longer context, instruction chaining). It also seems like they’ll soon have audio-in -> audio-out functionality; it’s already in beta.

Fast Forwarding Low Rank Training - A funny technique: you do an SGD step, then re-apply that same update again and again until the model stops improving on some tiny val set. Surprisingly, they say this reduces total FLOPs… I sort of feel that if you’re doing DoRA/LoRA on a small dataset you don’t really care about FLOPs, and I’d rather show 10x the samples, but who knows, maybe it’s useful.
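
A minimal sketch of the fast-forward loop described above, assuming plain gradient descent on a toy two-parameter linear model as a stand-in for real LoRA/DoRA adapter updates, and fast-forwarding after every step for simplicity. The function names, data, and hyperparameters are all made up for illustration; this is not the paper’s code.

```python
import random


def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def grad(w, b, data):
    """Analytic gradient of the MSE with respect to (w, b)."""
    n = len(data)
    gw = sum(2 * (w * x + b - y) * x for x, y in data) / n
    gb = sum(2 * (w * x + b - y) for x, y in data) / n
    return gw, gb


def fast_forward_step(w, b, train, val, lr=0.01, max_repeats=100):
    """One gradient step, then re-apply the same delta while val loss improves.

    Returns the new parameters and how many extra times the delta was applied.
    """
    gw, gb = grad(w, b, train)
    dw, db = -lr * gw, -lr * gb          # the update from a single ordinary step
    w, b = w + dw, b + db
    best = mse(w, b, val)
    repeats = 0
    while repeats < max_repeats:
        cand_w, cand_b = w + dw, b + db  # re-apply exactly the same update
        cand = mse(cand_w, cand_b, val)
        if cand >= best:                 # tiny val set stopped improving: stop
            break
        w, b, best = cand_w, cand_b, cand
        repeats += 1
    return w, b, repeats


if __name__ == "__main__":
    random.seed(0)
    # synthetic data from y = 3x + 1 plus a little noise
    points = [(x / 10, 3 * (x / 10) + 1 + random.gauss(0, 0.05)) for x in range(50)]
    train, val = points[::2], points[1::6]  # "tiny" val set of 9 points
    w, b = 0.0, 0.0
    for step in range(5):
        w, b, k = fast_forward_step(w, b, train, val)
        print(f"step {step}: w={w:.3f} b={b:.3f} val_mse={mse(w, b, val):.4f} repeats={k}")
```

The repeated updates cost only forward passes on the tiny val set, which is where any FLOP savings would have to come from.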

The Moshi paper (PDF) - They wrap an audio model around a pre-trained text backbone and have it produce text along with audio (‘inner monologue’). Haven’t read it properly yet.

https://llamacoder.together.ai/ - Together + Meta make a Claude Artifacts alternative. On my first test it was impressively close to correct (it got some of the functionality; to be fair, Claude also didn’t get this one in one shot). I like that it’s a single click to publish the app (here’s mine) and to open it in CodeSandbox. Very neat implementation! Meta’s blog on the subject.

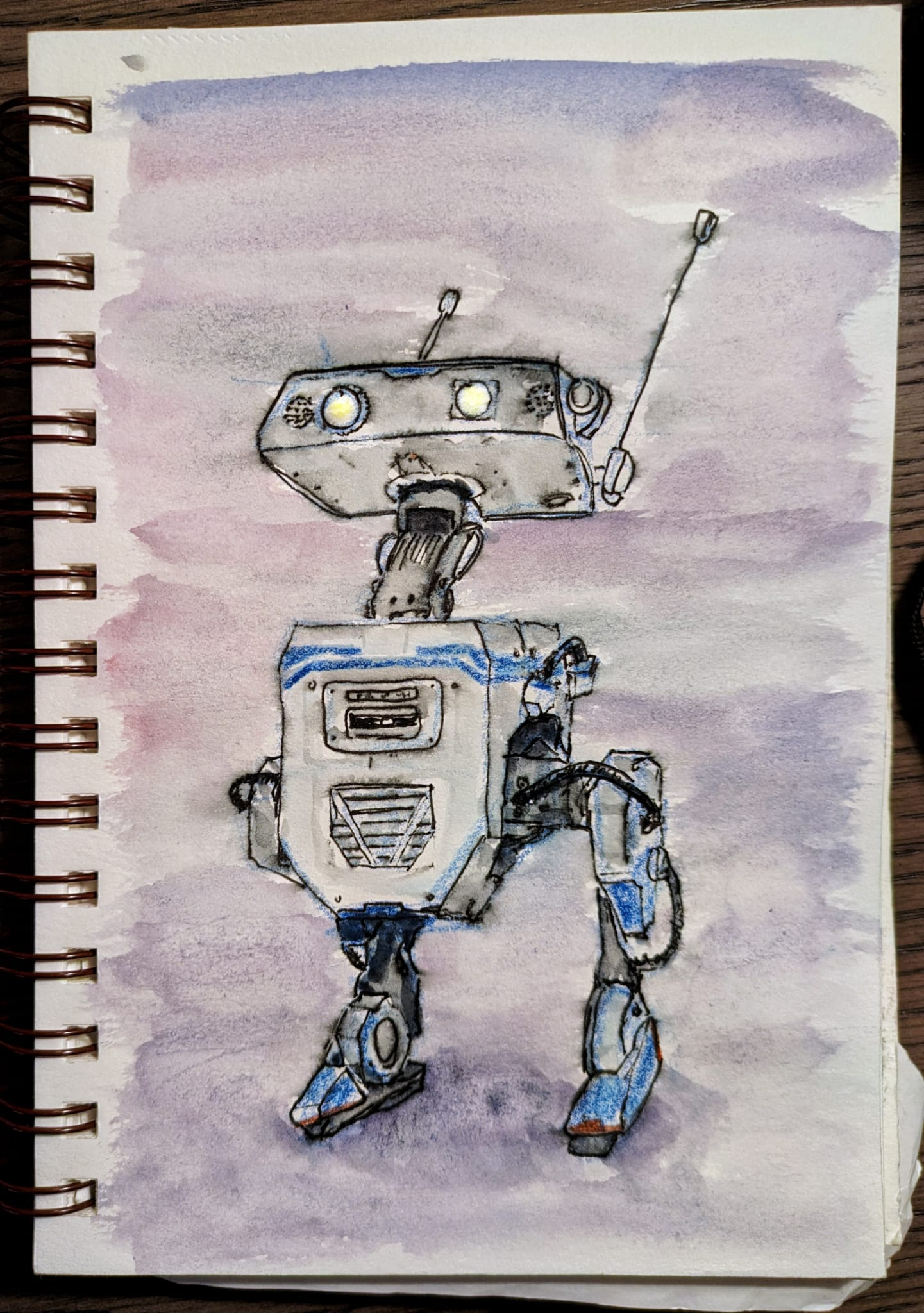

Open Duck Mini. This robot won’t get out of my head. OG Disney paper with their design: here. My fan art from when I saw one in person at NeurIPS is included at the end of this post.

Bauble studio - Fun little SDF coding tool.

Liquid AI have… something? Not too sure what their ‘Language LFMs’ are or how suspicious I should be; awaiting more detail before I waste time trying to read the tea leaves.

https://www.interconnects.ai/p/riley-goodside-on-science-of-prompting a podcast I haven’t listened to yet.

https://walzr.com/bop-spotter Love this project! “I installed a box high up on a pole somewhere in the Mission of San Francisco. Inside is a crappy Android phone, set to Shazam constantly, 24 hours a day, 7 days a week. It’s solar powered, and the mic is pointed down at the street below.”

https://simonwillison.net/2024/Oct/1/openai-devday-2024-live-blog/ Simon making the most useful live feed I’ve seen for an event like this. His follow-on post https://simonwillison.net/2024/Oct/2/not-digital-god/ is also great.

https://soumith.ch/blog/2024-10-02-training-10k-scale.md.html Soumith jots down some good notes on training at scale.

https://ai.meta.com/research/movie-gen/ Meta’s amazing new video model. Impressive results. Especially useful (imo) is the editing capability, which they get by jointly training on video generation and image editing, getting video edits ‘for free’. I need to look into the very detailed paper (PDF).

https://blogs.microsoft.com/blog/2024/10/01/an-ai-companion-for-everyone/ Microsoft are rolling out a new iteration of Copilot for everything, a way for lots of people to try something like advanced voice mode, I guess. It’s frustrating, though: the version I have on my Windows desktop can’t browse, call functions, see the screen or really do anything besides yap. I’m realizing voice + functions is amazing, while voice alone is useless for many applications (but still nice for some).

https://lunar-joke-35b.notion.site/SigLIP-Paper-hola-sigmoid-7ed58a7108a04ddb99571cded0922386 is a nice primer on SigLIP and why it’s a better training approach than the softmax-based CLIP version.
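
To make the SigLIP vs CLIP difference concrete, here is a small pure-Python sketch of both losses on a batch of paired embeddings. The softmax (CLIP-style) loss normalizes each row and column of the similarity matrix over the whole batch, while the sigmoid (SigLIP-style) loss treats every image-text pair as an independent binary label (+1 on the diagonal, -1 elsewhere), so no batch-wide normalization is needed. The defaults t=10 and b=-10 match the initialization reported in the SigLIP paper as far as I know; treat them, and all helper names, as assumptions.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [a / n for a in v]


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -math.log1p(math.exp(-x)) if x >= 0 else x - math.log1p(math.exp(x))


def siglip_loss(img, txt, t=10.0, b=-10.0):
    """Sigmoid loss: each (image i, text j) pair is its own binary problem."""
    img = [normalize(v) for v in img]
    txt = [normalize(v) for v in txt]
    n = len(img)
    total = 0.0
    for i in range(n):
        for j in range(n):
            z = 1.0 if i == j else -1.0  # positive pair only on the diagonal
            total += -log_sigmoid(z * (t * dot(img[i], txt[j]) + b))
    return total / n


def clip_loss(img, txt, t=10.0):
    """Softmax (InfoNCE) loss, averaged over image->text and text->image."""
    img = [normalize(v) for v in img]
    txt = [normalize(v) for v in txt]
    n = len(img)
    logits = [[t * dot(img[i], txt[j]) for j in range(n)] for i in range(n)]

    def row_xent(m):
        # cross-entropy of each row against its diagonal entry
        loss = 0.0
        for i, row in enumerate(m):
            mx = max(row)
            lse = mx + math.log(sum(math.exp(x - mx) for x in row))
            loss += lse - row[i]
        return loss / len(m)

    cols = [list(c) for c in zip(*logits)]
    return 0.5 * (row_xent(logits) + row_xent(cols))


if __name__ == "__main__":
    img = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    txt_matched = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    txt_shuffled = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]
    print("sigmoid:", siglip_loss(img, txt_matched), siglip_loss(img, txt_shuffled))
    print("softmax:", clip_loss(img, txt_matched), clip_loss(img, txt_shuffled))
```

Note that the double loop in `siglip_loss` never needs a sum over the whole row, which is what lets the sigmoid version be chunked across devices without gathering the full similarity matrix.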

https://jake.fun/ Love finding great blogs/personal sites like this, where people joyfully play. Definitely adding this to my list of faves.

https://codepen.io/fand/pen/Vwojwqm - I’ve been playing with coding up some different webcam effects (inspired by posy’s motion extraction video, to start with) and this datamosh effect is trippy and great!
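
For reference, a sketch of the motion extraction trick from posy’s video as I understand it: blend each frame 50/50 with an inverted copy of a frame from a few steps earlier, so static pixels cancel to flat mid-gray and only moving regions stand out. Frames here are plain nested lists of 0-255 grayscale values and `motion_extract`/`delay` are made-up names; a real webcam version would do the same per-pixel math on canvas or shader data.

```python
def motion_extract(frames, delay=1):
    """Blend each frame 50/50 with the inverted frame from `delay` steps earlier.

    frames: list of 2-D grayscale frames (lists of rows of 0-255 values).
    Returns len(frames) - delay output frames.
    """
    out = []
    for t in range(delay, len(frames)):
        cur, past = frames[t], frames[t - delay]
        out.append([
            # static pixel: (v + (255 - v)) / 2 == 127.5, i.e. flat mid-gray
            [(c + (255 - p)) / 2 for c, p in zip(cur_row, past_row)]
            for cur_row, past_row in zip(cur, past)
        ])
    return out


if __name__ == "__main__":
    # one-row "video": the left pixel never changes, the right one darkens
    video = [[[10, 200]], [[10, 50]]]
    print(motion_extract(video))  # [[[127.5, 52.5]]]
```

Raising `delay` trades responsiveness for sensitivity: slow motion only shows up once the frames being compared are far enough apart.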

CAX: Cellular Automata Accelerated in JAX Cool CA paper with a bunch of fun experiments; I should try this library next time I get the NCA itch.

VinePPO: Unlocking RL Potential For LLM Reasoning Through Refined Credit Assignment Uses Monte Carlo-based value estimates instead of large value networks. I like this idea; I need to read the paper carefully at some point this week.
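
A toy sketch of the core idea as I read it: instead of a learned value network, estimate the value of each intermediate state by sampling several completions from the current policy and averaging their final rewards, then take each step’s advantage as the change in estimated value. The “policy” here is a hypothetical biased coin that emits 1s, a solution counts as correct only if every step is a 1, and all names and constants are invented for illustration.

```python
import random

LENGTH = 4    # hypothetical: number of reasoning "steps" per solution
TARGET = 4    # hypothetical: answer is correct only if every step is a 1
P_ONE = 0.7   # hypothetical stand-in policy: emits a 1 with this probability


def policy_step(rng):
    return 1 if rng.random() < P_ONE else 0


def reward(solution):
    return 1.0 if sum(solution) == TARGET else 0.0


def mc_value(prefix, rng, k=2000):
    """Estimate V(prefix) by completing it k times with the policy and
    averaging the final reward (no learned value network)."""
    total = 0.0
    for _ in range(k):
        sol = list(prefix)
        while len(sol) < LENGTH:
            sol.append(policy_step(rng))
        total += reward(sol)
    return total / k


def step_advantages(trajectory, rng, k=2000):
    """Advantage of step t: V(s_{t+1}) - V(s_t), with gamma = 1, reward only at
    the end, and the terminal 'value' replaced by the true reward."""
    values = [mc_value(trajectory[:t], rng, k) for t in range(len(trajectory))]
    values.append(reward(trajectory))
    return [values[t + 1] - values[t] for t in range(len(trajectory))]


if __name__ == "__main__":
    rng = random.Random(0)
    # the third step (a 0) makes the answer unreachable, so its advantage is negative
    print(step_advantages([1, 1, 0, 1], rng))
```

The cost is extra sampling per state rather than training a second large network, which is the trade-off the paper title hints at.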

HelpSteer2-Preference: Complementing Ratings with Preferences Alignment: should you use ratings or preferences? This paper: Why not both :) On the reading list it goes.

The Perfect Blend: Redefining RLHF with Mixture of Judges Meta show off fancy post-training, looks promising, on the reading list too.

I just want to make an app based on this tweet, for fun (cool 2-D visualization of a Rubik’s cube).

Cool-looking RL conf talk I need to watch. @pcastr gives me the feeling smart people are working on RL and that it is getting more sensible by the year.

Evaluation of OpenAI o1: Opportunities and Challenges of AGI Bunch of evals on o1 (preview). To read, maybe.

Fields Of The World - a giant open-source dataset of labelled fields. Man, I worked on something like this task back in the day; this is so good to see!

Ctrl-X: Controlling Structure and Appearance for Text-To-Image Generation Without Guidance - A “simple training-free and guidance-free framework for text-to-image (T2I) generation with structure and appearance control” Very cool! A training-free ControlNet. I should make a demo for this if there isn’t one already.

LLMs Still Can’t Plan; Can LRMs? A Preliminary Evaluation of OpenAI’s o1 on PlanBench some sort of eval for planning, might be interesting, not reading for now.

https://www.danielcorin.com/posts/2024/claude-3.5-sonnet-connections-evals/ Someone else playing with Connections, good stuff.

https://www.anthropic.com/news/contextual-retrieval - Common sense, great results, love to see it! Anthropic doing good work.
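
The core trick in the post, as I understand it, is to prepend a short, chunk-specific context (written by an LLM that sees the full document) to each chunk before indexing it with embeddings and BM25. A toy sketch of why that helps keyword-style retrieval, where plain term overlap stands in for BM25, the context string is written by hand instead of by an LLM, and the chunk, query, and function names are all made up.

```python
import re


def tokens(text):
    """Lowercased word tokens; a crude stand-in for a real BM25/embedding index."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query, chunk):
    """Number of distinct query terms that appear in the chunk."""
    return len(tokens(query) & tokens(chunk))


def contextualize(chunk, context):
    """Prepend chunk-specific context before indexing. In the real approach an
    LLM writes this context from the whole document; here it is passed in by
    hand (a hypothetical stand-in for that LLM call)."""
    return f"{context} {chunk}"


if __name__ == "__main__":
    chunk = "The company's revenue grew by 3% over the previous quarter."
    context = "This chunk is from ACME Corp's Q2 2023 quarterly filing."
    query = "ACME Q2 2023 revenue growth"
    print(overlap_score(query, chunk))                          # 1
    print(overlap_score(query, contextualize(chunk, context)))  # 4
```

The raw chunk never names the company or the quarter, so a query that does can only match it on “revenue”; the prepended context restores the missing identifiers.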

I should look at this paper that claims to get rid of the over-saturation from CFG. And this one (Fine-Tuning Image-Conditional Diffusion Models is Easier than You Think on arXiv) that shows a better way to turn e.g. Stable Diffusion into a depth or normal (or …) prediction model. @jfischoff is good at finding these gems. Alas, I don’t keep up with diffusion as much these days, but this may be relevant for, e.g., audio diffusion models used for audio quality fixing.
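
For context, standard classifier-free guidance combines the conditional and unconditional noise predictions with a guidance scale $w$. Setting $w = 1$ gives the plain conditional prediction, and the large values of $w$ often used in practice are where the over-saturation tends to show up. This is the generic formulation, not the specific fix proposed in that paper.

$$
\tilde{\epsilon}_\theta(x_t, c) = \epsilon_\theta(x_t, \varnothing) + w\,\big(\epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing)\big)
$$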

When a language model is optimized for reasoning, does it still show embers of autoregression? An analysis of OpenAI o1 A follow-up to “Embers of Autoregression” with o1; both look worth digging into at some point. From a skim, o1 does seem to be starting to overcome some AR-based issues, but it has a ways to go yet.

Videos I still might watch: DEF CON talk on AI from the head of security at OpenAI, fireside chat with Sam, DevDay Latent Space podcast.

3D printing related videos I want to replicate: fractal vise, filament bearings (very neat idea).

And now as promised, robot fan art.